In my role as VP of Design Strategy at SAP SuccessFactors, I am constantly involved in driving innovation in Human Capital Management (HCM). In my previous blog posts, I have shared my experiences in helping teams utilize frameworks like Jobs-to-be-Done (JTBD) to make user-centric product decisions. One common question is whether SAP SuccessFactors has a catalog of Jobs-to-be-Done specifically for AI, a question recently raised by my colleague, Caitlynn Sendra. This question reflects a concern I have – the tendency to see AI as a solution in search of a problem. However, JTBD is technology-agnostic and focuses on the fundamental reasons behind the goals Job Performers are aiming to achieve.

This got me thinking: is AI, often hailed as a revolutionary solution, at risk of becoming a shiny object searching for a problem to solve? Can AI, with its vast potential, truly align with the user-centric core of the JTBD framework? The answer, as we’ll explore in this blog post, is a resounding yes. But here’s the key: JTBD acts as a powerful filter for AI. It ensures that AI isn’t just another trendy buzzword, but a well-considered tool that enhances the user experience by addressing core user needs in a meaningful way.

Throughout this post, we’ll delve into how JTBD can guide the strategic use of AI. We’ll explore how AI can be leveraged to fulfill the Jobs identified within your meticulously crafted job maps, moving from simply understanding user needs to creating innovative solutions that truly resonate with your target audience.

TL;DR

- JTBD Uncovers the Why: Forget features; JTBD drills down to the core reasons behind user behavior. What problems are they trying to solve (Jobs)? This unveils the fundamental user needs your solution should address.

- AI Fulfills the How: Once you understand the Jobs, AI empowers you to address them effectively. It can automate tasks, personalize experiences, analyze data, and provide intelligent recommendations—all tailored to user needs.

- The User-Centric Advantage: The JTBD-AI combo ensures you’re building the right thing – solutions that resonate with users. AI becomes a tool to fulfill user needs, not a technology implemented for its own sake.

- Prioritize for Impact: Not all AI solutions are equal. Use a systematic prioritization policy to select the high-impact, achievable solutions that deliver real value.

- Consider Ethical Implications: AI algorithms can perpetuate biases in training data, leading to unfair outcomes. Proactively addressing these concerns ensures that your AI solutions are effective, responsible, and trustworthy.

Introduction

In the ever-evolving landscape of product development, understanding user needs remains paramount. The Jobs-to-be-Done (JTBD) framework has established itself as a powerful tool for uncovering the core reasons behind user behavior. As explored in our previous post on crafting customer-centric roadmaps, JTBD goes beyond surface-level features, pinpointing the fundamental problems users are trying to solve (their Jobs). It allows us to shift our focus from what users want (features) to why they need them (Jobs).

Within the complex process of product development, the cooperation between product teams and business stakeholders is a crucial element that connects strategic objectives with practical insights. It is vital to facilitate meaningful discussions in this area to ensure that the diverse perspectives of stakeholders align seamlessly with the overall product vision. The Jobs-to-be-Done (JTBD) framework plays a central role in this collaborative effort by providing a structured approach that goes beyond individual preferences or biases. By focusing on the fundamental goals that users seek to achieve, JTBD enables a shared understanding that bridges the gap between the intricacies of product design and the broader business objectives.

Avoiding Shiny-Object Syndrome with Jobs-to-be-Done

Technology research firm Gartner, Inc. has estimated that 85% of artificial intelligence (AI) and machine learning (ML) projects fail to produce a return for the business. The reasons often cited for the high failure rate include poor scope definition, bad training data, organizational inertia, lack of process change, mission creep and insufficient experimentation (Vartak, M. Achieving next-level value from AI by focusing on the operational side of machine learning, 2023).

We will cover some of the reasons why AI projects fail in this post, but the risk that Jobs-to-be-Done mitigates the most is poor scope definition: JTBD’s strength lies in its focus on uncovering the core needs and motivations driving user behavior (Jobs-to-be-Done). It compels us to look beyond features and functionalities and understand the fundamental problems users are trying to solve.

The Jobs-to-be-Done (JTBD) methodology is a valuable tool that facilitates investment discussions with business stakeholders. It acts as a compass, directing conversations toward the most critical outcomes, all viewed through the lens of the job performer. As explained in an earlier blog post, a “job” in the JTBD framework refers to a goal or objective that exists independently of any specific solution. This independence is crucial to understanding that the service or product is simply a means to an end, and the focus should be on identifying the job that customers are trying to accomplish rather than the features of the product or service itself.

Facilitating Investment Discussions in Strategy

Learn more about facilitating investment discussions by finding objective ways to value ideas, approaches, and solutions to justify the investment in them (Photo by Pixabay on Pexels.com)

A key aspect of this approach is to focus on aligning with the critical objectives from the job performer’s perspective. As stated by Anthony Ulwick in his book What Customers Want, the JTBD framework is centered on people’s objectives, regardless of the methods used to achieve them. This perspective offers a structured and insightful way to understand customer needs, which in turn allows for more accurate predictions of future customer actions.

In the outcome-driven paradigm the focus is not on the customer, it is on the job: the job is the unit of analysis. When companies focus on helping the customer get a job done faster, more conveniently, and less expensively than before, they are more likely to create products and services that the customer wants.

Ulwick, A. W., What customers want (2005)

From that perspective, there are a few ways that my colleagues and I have been taking full advantage of Jobs to be Done to create a shared understanding of product outcomes and customer outcomes:

- Discuss problems instead of solutions: because Jobs to be Done describe customer and user needs independently of solutions, they help, directly or indirectly, to create the mindset designers keep saying product teams lack: understanding the problem space before jumping to solutions.

- Provide clarity and scope for product discovery activities: uncovering Jobs to be Done requires clarity about which job performers our research should focus on and what they are trying to get done, which makes it much easier to prepare for research, especially when recruiting participants and deciding which problems to probe.

- Provide a great way to synthesize discovery findings: if you know me, you know that my design and research philosophy is to get everyone involved in processing research data (instead of writing long reports for stakeholders to read). But even when you process the research data together with the team, Jobs to be Done are great for synthesizing research outputs (e.g., user needs, goals, success criteria) in a format that is easy to consume, such as Job Stories (coming up next)!

These grounding principles ensure that any technology we consider, including AI, is not just a cool feature but a targeted solution that addresses a real user need.

AI is a Powerful Tool, Not a Magic Bullet

AI offers a vast toolbox of functionalities – automation, personalization, data analysis, and intelligent recommendations. However, simply throwing AI at a problem won’t guarantee success. Here’s how JTBD helps us leverage AI effectively:

- Identifying the Right Use Case: JTBD helps us pinpoint the specific Jobs users are struggling with. This allows us to determine if AI is even the right tool for the job. For example, a simple to-do list app might not need AI, while a complex financial planning platform could benefit from AI-powered investment recommendations.

- Defining Success Metrics: By understanding the Job Performer’s needs, we can define clear success metrics for the AI solution. This ensures we’re measuring its effectiveness based on its ability to address the core user needs, not just fancy technical capabilities.

Avoiding AI for AI’s Sake

The “technology for technology’s sake” pitfall happens when we get caught up in the excitement of AI’s potential without considering if it truly solves a user problem. JTBD helps us avoid this trap by:

- Prioritizing User Needs Over Technology: The JTBD framework keeps the focus on the user. We first identify the Jobs, then explore potential solutions – and AI is just one option on the table. It’s chosen only if it demonstrably addresses the user need more effectively than other solutions.

- User Testing and Validation: JTBD, when combined with other human-centered methods, promotes iterative development and validation. This allows us to identify if the AI solution fulfills the user’s Job and refine it if necessary. Like any other feature, AI shouldn’t be implemented in a vacuum.

Understanding Needs and Fulfilling Them with Intelligence

Imagine you’re building a new product. You could throw cutting-edge technology at the problem, hoping it sticks. But often, this kind of “solution-first” approach misses the mark. Here’s where Jobs-to-be-Done (JTBD) comes in.

JTBD Uncovers the “Why”

Clayton Christensen credits Ulwick and Richard Pedi of Gage Foods with the way of thinking about market structure used in the chapter “What Products Will Customers Want to Buy?” in his Innovator’s Solution and called “jobs to be done” or “outcomes that customers are seeking”.

A customer’s job could be the tasks that they are trying to perform and complete, the problems they are trying to solve, or the needs they are trying to satisfy. (Osterwalder, A., Pigneur, Y., Papadakos, P., Bernarda, G., Papadakos, T., & Smith, A. Value proposition design, 2014).

What is the job performer trying to achieve? A job is a goal or an objective independent of your solution. The aim of the job performer is not to interact with your company but to get something done. Your service is a means to an end, and you must first understand that end.

Kalbach, J., Jobs to be Done Playbook (2020)

Because they don’t mention solutions or technology, jobs to be done should be as timeless and unchanging as possible. Ask yourself “How would people have gotten the job done 50 years ago?” Strive to frame jobs in a way that makes them stable, even as technology changes. (Kalbach, J. Jobs to be Done Playbook, 2020).

Distinguish between three main types of customer jobs to be done and supporting jobs (Osterwalder, A., Pigneur, Y., Papadakos, P., Bernarda, G., Papadakos, T., & Smith, A. Value proposition design, 2014):

- Functional Jobs: When our customers try to perform and complete a specific task or solve a specific problem.

- Social Jobs: When our customers want to look good or gain power and status. These jobs describe how customers want to be perceived by others.

- Personal/Emotional Jobs: When our customers seek a specific emotional state, such as feeling good or secure.

JTBD acts as a powerful tool for understanding the “why” – the fundamental needs and motivations driving user behavior. It delves deeper than simply identifying features users want. Instead, JTBD focuses on the underlying problems users are trying to solve (the Jobs).

For example, let’s say you’re building a fitness app. JTBD wouldn’t focus on fancy exercise routines, but rather on the user’s core need – staying fit and healthy. This could manifest in various ways: the desire to lose weight, gain muscle, or simply maintain a healthy lifestyle.

AI Fulfills the “How”

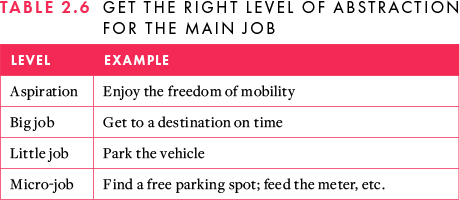

Goals are hierarchical. Through a process of laddering, you can roll up objectives to different levels. The goal at one level may be a stage for the next. In JTBD, there are four levels to consider (Kalbach, J. Jobs to be Done Playbook, 2020):

- Aspirations: An ideal change of state, something the individual desires to become

- Big Job: A broader objective, typically at the level of the main job

- Little Job: A smaller job that corresponds roughly to stages in a big job

- Micro-Job: Activities that resemble tasks, but are formulated in terms of JTBD

Getting the right level of abstraction is critical. Ask “why?” to move up in the JTBD hierarchy and ask “how?” to move down.

Kalbach, J. Jobs to be Done Playbook (2020).

When working with JTBD, you’ll confront the issue of granularity. The question you need to answer is, “At what level of abstraction do you want to try to innovate?” There’s no right or wrong answer—it depends on your situation and aim. Getting the right altitude is key. Objectives at one level roll up into higher-order goals, generally called laddering. In JTBD work, the principle of laddering applies as well. For instance, in his book Competing Against Luck, professor Clayton Christensen points to “big jobs,” or things that have a big impact on our lives (like finding a new career) and “little jobs,” or things that arise in our daily lives, such as passing time while waiting in line (Kalbach, J. Jobs to be Done Playbook, 2020).

Going back to the “People don’t want a quarter-inch drill, they want a hole in the wall” example, being able to navigate the levels of granularity through abstraction laddering can really help get to the heart of why functional jobs exist and understand what customers and users perceive as real value:

- People don’t want a quarter-inch drill, they want the hole in the wall… but why?

- People don’t want just the hole in the wall, they want to hang a picture on the wall… but why?

- People don’t want just to hang a picture on the wall, they want their house to look pretty… but why?

- People don’t want just to make their house look pretty, they want to impress their friends… but why?

- People don’t just want to impress their friends, they want to be perceived as someone with good taste… but why?

You get the idea!

Keeping your work at the appropriate granularity can be tricky, but part of the territory. Sometimes, you need to know the broadest possible jobs—how customers want to change their lives. Other times, you’ll be operating at a lower level with a narrower scope (Kalbach, J. Jobs to be Done Playbook, 2020).

Here is yet another advantage of Jobs to be Done: we can use abstraction laddering to help teams transition from what users are trying to get done (the “why”s) to the solution (the “how”s).
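To make the laddering idea concrete, here is a minimal Python sketch. It is only an illustration: the `Job` class, the `ladder_up` and `ladder_down` helpers, and the way the drill example is assigned to Kalbach's four levels are my own assumptions, not something prescribed by the JTBD literature.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Job:
    statement: str
    level: str                      # e.g. "Aspiration", "Big Job", "Little Job", "Micro-Job"
    parent: Optional["Job"] = None  # the job you reach by asking "why?"


def ladder_up(job: Job) -> List[str]:
    """Ask "why?" repeatedly: walk from this job up to the top of the ladder."""
    chain = []
    current = job
    while current is not None:
        chain.append(current.statement)
        current = current.parent
    return chain


def ladder_down(job: Job, all_jobs: List[Job]) -> List[str]:
    """Ask "how?": list the jobs that sit directly below this one."""
    return [j.statement for j in all_jobs if j.parent is job]


# Hypothetical ladder based on the drill example (level assignment is an assumption).
taste = Job("Be perceived as someone with good taste", "Aspiration")
pretty = Job("Make the house look pretty", "Big Job", parent=taste)
picture = Job("Hang a picture on the wall", "Little Job", parent=pretty)
hole = Job("Make a hole in the wall", "Micro-Job", parent=picture)
jobs = [taste, pretty, picture, hole]

print(" -> ".join(ladder_up(hole)))   # moving up: the "why"s
print(ladder_down(pretty, jobs))      # moving down: the "how"s
```

Notice that no level mentions a drill or any other solution. Solutions only attach once the team has agreed on the altitude at which it wants to innovate.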

Bringing Business Impact and User Needs together with Jobs to be Done (JTBD)

Jobs to be Done work as a great common exchange currency between leadership, designers, product managers and developers by helping us discuss problems instead of solutions (Photo by Blue Bird on Pexels.com)

Artificial intelligence (AI) isn’t just about creating intelligent machines; it’s about unlocking new ways to address fundamental human needs. The JTBD framework provides a roadmap for understanding these needs, and AI offers a powerful toolkit to fulfill them in innovative ways. Imagine AI automating tedious tasks within a product that directly address a user’s Job-to-be-Done (e.g., scheduling appointments), or personalizing experiences that anticipate a user’s needs before they even arise. When AI is strategically aligned with JTBD insights, it’s not just hype; it becomes a powerful force for creating user-centric solutions that fundamentally change how we interact with technology in a way that truly serves us.

Here’s how AI is poised to revolutionize this landscape:

- From Explicit Commands to Natural Interaction: AI is moving us beyond the limitations of clicking buttons and typing commands. Imagine voice-activated interfaces that understand natural language, allowing us to interact with technology in a more intuitive and conversational way. Think of a smart home system that responds to simple voice commands to adjust lighting or temperature.

- Personalized Experiences, Tailored to You: AI excels at personalization, analyzing user data to understand preferences and behaviors. This translates to a user experience that feels custom-made. For instance, an AI-powered news feed can curate articles based on your interests, or a streaming service can recommend movies you’ll genuinely enjoy.

- Proactive Assistance and Predictive Power: AI can anticipate user needs before they even arise. Imagine a fitness tracker that suggests workouts based on your daily activity level or a virtual assistant that reminds you to buy groceries when supplies run low. AI’s predictive capabilities empower technology to be proactive, seamlessly integrating into our lives and assisting us in achieving our goals.

- Automation that Saves Time and Effort: Repetitive tasks that were once tedious chores can be automated by AI. This could involve scheduling meetings, managing finances, or generating reports. By automating these tasks, AI frees up our time and allows us to focus on more important matters.

- Accessibility for Everyone: AI can break down barriers and make technology more accessible for everyone. For example, AI-powered text-to-speech can help visually impaired users navigate websites, and voice recognition tools can empower users with physical limitations to interact with technology hands-free.

Case Studies of AI

Although I cannot confirm whether these companies explicitly use JTBD to guide their product decisions, let’s analyze some compelling examples of how leading organizations are leveraging AI to address user needs that align with classic JTBD principles.

The analysis will showcase how AI functionalities potentially address core user needs, even without direct confirmation of a JTBD framework being used. By examining these examples, we can gain valuable insights into the powerful synergy between JTBD and AI in creating user-centric solutions.

Netflix: Personalized Recommendations

- Job to be Done: Users struggle to find movies and shows they’ll enjoy on streaming platforms with overwhelming options.

- JTBD Approach: Netflix analyzes user behavior (watch history, ratings) and leverages AI to recommend personalized content, addressing the job of finding something to watch efficiently and effortlessly.

Intuit TurboTax: Automating Tax Filing

- Job to be Done: Filing taxes can be complex and time-consuming, leading to frustration and errors.

- JTBD Approach: Intuit TurboTax uses AI to automate data entry, deductions, and calculations, addressing the job of filing taxes accurately with minimal effort.

Spotify: Personalized Playlists

- Job to be Done: Users want to discover new music that aligns with their tastes but lack the time or resources to explore extensively.

- JTBD Approach: Spotify leverages AI to analyze user listening habits and generate personalized playlists, addressing the job of discovering new music efficiently and enjoying a curated listening experience.

Duolingo: Personalized Learning Paths

- Job to be Done: Learning a new language takes time and dedication, with traditional methods often inflexible and impersonal.

- JTBD Approach: Duolingo utilizes AI to personalize learning paths based on user progress, strengths, and weaknesses, addressing the job of learning a new language effectively and in an engaging way.

Amazon: Predictive Replenishment

- Job to be Done: Consumers want to ensure they have essential household items but struggle to keep track of inventory and reorder on time.

- JTBD Approach: Amazon’s “Subscribe & Save” program uses AI to predict user needs and automatically reorder frequently used items, addressing the job of never running out of essentials with minimal effort.

These examples showcase how AI, when informed by JTBD, can go beyond mere features to address core user needs. By focusing on the “why” (user needs) and leveraging AI to address the “how” (fulfilling those needs), companies create innovative solutions that resonate with users and drive business success.

However, it’s important to acknowledge that AI is still evolving. While the potential for revolutionizing user interaction is immense, responsible development and ethical considerations are crucial. The key lies in harnessing AI’s power to truly understand user needs and create technology that seamlessly integrates into our lives, empowering us, not replacing us.

Please note that, for the purpose of this blog post, I’m distinguishing between using AI to discover jobs and using AI to fulfill identified jobs. In a previous post, I mentioned that JTBD remains the primary method for uncovering user needs (Jobs-to-be-Done). AI, in this context, acts as a powerful tool to address those already identified needs within the job map. In the next sections, we will focus on how AI can enhance existing job maps, not replace user research in discovering new jobs.

Bringing JTBD and AI Together

By combining JTBD’s user-centric approach with AI’s technological capabilities, we can create products and services that truly resonate with users. JTBD ensures we’re building the right thing—solutions that address the core user needs. AI empowers us to build those solutions smarter and more effectively, providing a personalized and intelligent experience that fulfills the user’s job in the most impactful manner. This emphasizes the complementary nature of JTBD and AI. They work together to create a powerful framework for user-centric innovation.

This section will delve into how AI can transform static needs into dynamic solutions when informed by the insights gleaned from JTBD job maps (as discussed in our previous post). We’ll explore how AI functionalities can automate tasks, personalize experiences, and provide intelligent recommendations tailored to address the Jobs identified within your meticulously crafted job maps. This fusion of JTBD and AI empowers you to move beyond simply understanding user needs to create innovative solutions that truly resonate with your target audience.

Step 1: Identify Key Job Needs

For this first step, I recommend you take a look at my previous post, where I introduced a specific type of Alignment Diagram called Job Map. For the purpose of this post, here is what you need to know to bring JTBD and AI together:

In my practice of facilitating strategy discussions, the integration of Jeff Patton’s User Story Mapping, the hierarchical insight from Jim Kalbach’s Jobs-to-be-Done (JTBD), and the abstraction laddering methodology advocated by Daniel Stillman manifests a holistic framework for comprehensive product development.

As Patton’s User Story Map progresses from the broad strokes of “activities or scenarios” to the refined details of “details or user stories,” Kalbach’s JTBD hierarchy complements this journey with “functional jobs,” “social jobs,” and “emotional jobs.” Aligning these with Stillman’s abstraction laddering, jobs seamlessly substitute for the “whys,” encapsulating the core motives and user needs, while User Stories become adept substitutes for the “hows,” translating those motives into tangible functionalities.

Stillman’s emphasis on shared goals, visualized through concentric circles and abstraction ladders, finds resonance in the collaborative aspects of User Story Mapping and the nuanced layers of JTBD, creating a unified framework that harmoniously blends the why, what, and how of product development. This synthesis provides a robust interface for stakeholders to collaboratively navigate the complexities of strategy and execution, ensuring a shared vision and clarity in goals, thus propelling the product development journey forward.

Crafting Customer-Centric Roadmaps with Jobs-to-be-Done

With a combination of User Story Mapping, Jobs-to-be-Done hierarchy, and Abstraction Laddering to facilitate strategy discussions, we can translate user needs into product functionalities while emphasizing shared goals and enabling stakeholders to navigate product development with clarity and a shared vision. (Photo by Sébastien BONNEVAL on Pexels.com)

Here’s where it becomes the foundation for leveraging AI. Let’s dive deeper into this first step:

- Revisiting the Job Map: Pull up your job map, a visual representation of the user’s journey in a specific situation. This map details the stages, frustrations, and desired outcomes associated with the job-to-be-done.

- Identifying Central Needs: Analyze each stage of the job map with a critical eye. What are the core needs and frustrations the job performer experiences at each step? Are they struggling to find information, complete a task efficiently, or stay motivated?

- Prioritizing Needs: Not all needs are created equal. Some will be more critical to the successful completion of the job than others. Prioritize the central needs that have the most significant impact on the user’s experience.

Here are some techniques to help you identify key job needs:

- Focus on the “Why”: Don’t just identify the actions the user takes (e.g., searching online). Explore the underlying reasons behind those actions (e.g., seeking information to make a purchase decision).

- Look for Emotional Cues: The job map might reveal emotional frustrations or anxieties users experience during specific stages. These emotional cues are powerful indicators of unmet needs.

- Consider Frequency: How often does a particular need arise in the job map? Frequent needs are likely more central to the user’s experience and warrant prioritization.
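One way to turn these techniques into a first-pass ranking is sketched below in Python. Treat it as a conversation starter rather than a method: the `Need` fields, the 1-5 scales, the weighting in `priority`, and the sample needs are all hypothetical and should be replaced with whatever your own research supports.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Need:
    stage: str           # job map stage where the need shows up
    description: str
    impact: int          # 1-5: effect on completing the job (team judgment)
    frequency: int       # 1-5: how often the need arises in the job map
    emotional_cue: bool  # True if research surfaced frustration or anxiety here


def priority(need: Need) -> int:
    # Hypothetical weighting: impact times frequency, plus a bump for emotional cues.
    return need.impact * need.frequency + (3 if need.emotional_cue else 0)


def rank_needs(needs: List[Need]) -> List[Need]:
    """Return needs ordered from highest to lowest priority."""
    return sorted(needs, key=priority, reverse=True)


# Hypothetical needs taken from an imaginary job map.
needs = [
    Need("Locate", "Find the right training material", impact=3, frequency=4, emotional_cue=False),
    Need("Execute", "Complete time sheet entry efficiently", impact=4, frequency=5, emotional_cue=True),
    Need("Monitor", "Stay motivated through a long course", impact=2, frequency=2, emotional_cue=True),
]

for need in rank_needs(needs):
    print(priority(need), need.stage, "-", need.description)
```

The numbers matter less than the discussion they trigger: if the team disagrees with the ranking, that disagreement usually points at a need that requires more research.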

Step 2: Explore AI Applications

Now that you’ve identified the central needs – the Jobs-to-be-Done (JTBD) – of your job performers through your job map (Step 1), it’s time to unleash the power of AI! Remember those yellow cards in your job map that represent potential solutions? Here, we’ll explore how AI applications can fill those yellow cards, focusing on four key areas: automation, personalization, data analysis, and intelligent recommendations. Each of these areas holds immense potential to address the core needs you’ve uncovered, transforming your static job map into a roadmap for user-centric AI-powered solutions.

However, it’s crucial to remember that building trust is paramount throughout this process. As John Maeda emphasizes in The Laws of Simplicity, user comfort with AI is key.

How comfortable are users about the computer knowing how they think, and how tolerant they will be if (and when) the computer makes a mistake guessing their desires?

Maeda, J., The laws of simplicity (2020)

We’ll explore how these AI functionalities can be designed with simplicity in mind, ensuring clear communication, transparency, and user control. By prioritizing these principles, you can ensure your AI solutions not only address user needs effectively but also foster trust and enhance the overall user experience.

Brainstorming AI Solutions

Armed with a deep understanding of user needs through JTBD job maps, and the potential of AI as a fulfillment tool, it’s time to unleash your creative spark! This section dives into the exciting world of brainstorming AI solutions. We’ll explore how to leverage the insights from your job maps to generate innovative ideas for integrating AI functionalities.

- Gather the Team: Assemble a diverse team with expertise in design, development, and user research. A collaborative environment fosters creative solutions.

- Focus on the Needs: Keep the central needs identified from the job map at the forefront of the discussion. Each proposed AI application should directly address a specific user need.

- Think Outside the Box: Don’t be afraid to explore unconventional uses of AI.

Exploring AI Capabilities

Drawing upon my experience as VP of Design Strategy at SAP SuccessFactors, I have a front-row seat to the innovative ways AI is transforming the Human Capital Management (HCM) landscape. In this role, I lead the charge in crafting our product design vision for the entire HCM product line. This involves ensuring a cohesive narrative across our offerings and establishing best practices and strategic principles that align with our vision for the future of work. Here’s the exciting part: these principles often intersect beautifully with the potential of AI!

Now, let’s delve into the four key areas of AI application (automation, personalization, data analysis, and intelligent recommendations) and brainstorm specific examples, drawing inspiration from real-world challenges faced by HCM professionals. By analyzing these hypothetical scenarios, we can illustrate how AI has the potential to address core user needs, even if it’s not always explicitly framed within a JTBD framework.

Automation of Repetitive Tasks

Identify repetitive tasks that hinder the job performer’s progress. Can AI automate these tasks, freeing up the user’s time and cognitive load? Here are some examples:

- Repetitive Tasks in Payroll: AI can automate tedious and time-consuming payroll tasks like data entry, time sheet verification, and generating reports. This frees up HR professionals to focus on more strategic initiatives.

- Candidate Screening in Recruiting: AI-powered resume screening can automate the initial stages of candidate selection, filtering resumes based on pre-defined criteria and identifying qualified candidates based on keywords and skills. This allows recruiters to spend more time interviewing top prospects.

Personalization & Tailored Experiences

Leverage AI to personalize the user experience based on individual needs and preferences, such as:

- Learning & Development: AI can personalize employees’ learning experiences. It can recommend relevant training materials and create personalized learning pathways by analyzing individual learning styles, strengths, and weaknesses.

- Employee Onboarding: An AI-powered onboarding program can personalize the onboarding experience for new hires. This could involve tailoring onboarding content, creating personalized welcome messages, and suggesting relevant resources based on the employee’s role.

Data Analysis to Uncover Hidden Insights

Utilize AI to analyze user data from the job map and identify hidden patterns and insights.

- Predictive Analytics for Talent Management: AI can analyze HR data to identify potential talent risks, such as employee turnover or performance issues. This allows HR to retain high performers and address potential problems proactively.

- Employee Sentiment Analysis: By analyzing employee communications and surveys, AI can identify employee sentiment and potential areas of dissatisfaction. This helps HR address concerns and cultivate a more positive work environment.

Intelligent Recommendations & Proactive Assistance

AI can anticipate user needs and proactively offer solutions through intelligent recommendations.

- Learning a new skill: Based on user progress, an AI-powered learning platform might recommend supplementary resources or suggest connecting with a human coach for additional support.

- Performance Management Feedback: Implementing AI-powered chatbots can facilitate performance management by prompting managers to provide regular feedback and offering suggestions for improvement based on employee performance data.

- Career Path Suggestions: AI can analyze employee skillsets and career aspirations to recommend suitable internal job openings or suggest relevant training programs to help them progress within the organization.

Not Too Human

Both Noessel (Designing Agentive Technology, 2017) and Bucher (Engaged: Designing for Behavior Change, 2020) emphasize that imbuing AI with human-like characteristics can enhance user trust and engagement, but it should be employed thoughtfully:

- Human-Like Characteristics: Infusing AI-powered products with human-like traits and behaviors resonates with users’ innate trust in human interactions.

- Striking the right balance: Imbuing the system with human-like attributes while also clearly communicating the system’s capabilities is crucial to building trustworthy experiences.

- Familiarity and Relatability: Anthropomorphism fosters a sense of familiarity, making users more likely to embrace the technology as a trustworthy companion.

- Avoiding the Uncanny Valley: The uncanny valley effect arises when technology appears overly human-like but falls short of perfection, creating discomfort and mistrust.

Remember: This is just a starting point. The specific applications of AI will vary depending on the job map and the central needs you identified.

You should also consider the ethical implications of each AI application you brainstorm. For instance, how will data privacy be ensured when personalizing the user experience? We will cover ethical implications later in this post.

Step 3: Evaluate Opportunities

We’ve brainstormed exciting AI applications to address user needs identified in your job map (Step 2). Now, it’s time to evaluate their desirability and feasibility more rigorously so you can focus on the ones with the greatest impact. This step utilizes decision matrices, scorecards, and formulas to help you prioritize solutions that offer the greatest value and are most realistic to implement.

The Jobs-to-be-Done (JTBD) framework helps teams compare, contrast, and debate the value of ideas and solutions. Outcome-Driven Innovation (ODI) is a reliable approach to reduce subjectivity while assessing the value of opportunities.

Ulwick’s opportunity algorithm measures and ranks innovation opportunities based on importance and satisfaction. By using this algorithm, product managers can pinpoint opportunities in the market and make important targeting and resource-related decisions.

Opportunity Score = Importance + max(Importance – Satisfaction, 0)

Ulwick, A. W., What customers want (2005)

An opportunity for improvement exists when an important outcome is underserved. An outcome with a high opportunity score merits the allocation of time, talent, and resources, as customers will recognize solutions that successfully serve it as inventive and valuable.

Jobs and outcomes that are unimportant or already satisfied represent little opportunity for improvement and consequently should not receive any resource allocation. We say that an outcome is overserved when its satisfaction rating is higher than its importance rating. When a company discovers these overserved outcomes, it should consider the following three avenues for possible action: stop doing it, reduce investment in it, or improve it enough to create a new outcome.
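As a minimal sketch of the opportunity algorithm described above, the Python below scores a few outcomes and flags the overserved ones. The outcome statements and the 0-10 importance and satisfaction ratings are invented for illustration; in practice they would come from surveying Job Performers.

```python
def opportunity_score(importance: float, satisfaction: float) -> float:
    """Opportunity Score = Importance + max(Importance - Satisfaction, 0)."""
    return importance + max(importance - satisfaction, 0)


# Hypothetical outcomes with (importance, satisfaction) ratings on a 0-10 scale.
outcomes = {
    "Minimize time to shortlist qualified candidates": (9.0, 4.0),
    "Minimize errors in payroll runs": (8.0, 8.5),
    "Minimize time to find relevant learning content": (6.0, 3.0),
}

# Rank outcomes from highest to lowest opportunity score.
ranked = sorted(
    ((name, opportunity_score(imp, sat)) for name, (imp, sat) in outcomes.items()),
    key=lambda pair: pair[1],
    reverse=True,
)

# An outcome is overserved when its satisfaction rating exceeds its importance rating.
overserved = [name for name, (imp, sat) in outcomes.items() if sat > imp]

for name, score in ranked:
    print(f"{score:5.1f}  {name}")
print("Overserved:", overserved)
```

With these made-up ratings, the shortlisting outcome scores 14 and ranks first, while the payroll outcome is flagged as overserved because its satisfaction rating is higher than its importance rating.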

In practical terms, the opportunity algorithm becomes a powerful tool for predicting where value migrates. This algorithm, as elucidated by Ulwick, allows product managers to pinpoint opportunities in the market at any given time and be the first to address them. This aligns seamlessly with the overarching goal of innovation: defining and delivering new solutions that continuously evolve each measure of value along its continuum, better satisfying the collective set of outcomes.

Moving Beyond Intuition with Quantitative Prioritization

While intuition plays a role, relying solely on “gut feeling” can be risky. Let’s explore some quantitative tools to guide your AI solution prioritization:

Decision Matrices

Here’s an example of how you can use a prioritization matrix to evaluate AI solutions:

| Feature (AI Application) | Effort (Technical Feasibility) | Impact (User & Business Value) | Priority |
|---|---|---|---|
| Personalized Learning Paths (HR) | Medium | High | High (Phased approach) |
| Automating Payroll Tasks (HR) | Low | High | Very High |
| AI-powered Candidate Screening (HR) | Medium | Medium | Medium (Pilot test before full integration) |
| Chatbot for Performance Feedback (HR) | Low | Medium | Medium (Consider for future development) |

Scorecards & Formulas

You can create a formula to compare the Return on Investment (ROI) of proposed initiatives and derive a priority list. Scoring every job, theme, feature idea, initiative, or solution allows you to develop a scorecard ranking each against the others (“A formula for Prioritisation” in Product Roadmaps Relaunched, Lombardo, C. T., McCarthy, B., Ryan, E., & Connors, M., 2017).

In the example above, CN stands for Customer Needs, BO stands for Business Objectives, E stands for Effort, C stands for Confidence, and P stands for Priority.
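To make the scorecard idea concrete, here is a small Python sketch. It assumes the formula takes the form P = (CN + BO) / E × C, which is consistent with the variables listed above but should be checked against the book. The initiatives, the 1-10 scales for CN, BO, and E, and the 0-1 scale for C are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    customer_needs: float       # CN, hypothetical 1-10 scale
    business_objectives: float  # BO, hypothetical 1-10 scale
    effort: float               # E, hypothetical 1-10 scale, must be positive
    confidence: float           # C, between 0 and 1

    def priority(self) -> float:
        """Assumed form of the formula: P = (CN + BO) / E * C."""
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return (self.customer_needs + self.business_objectives) / self.effort * self.confidence


# Invented scores for three of the AI applications discussed in this post.
initiatives = [
    Initiative("Personalized learning paths", 8, 6, 5, 0.5),
    Initiative("Automated payroll tasks", 7, 9, 2, 0.75),
    Initiative("Chatbot for performance feedback", 4, 4, 2, 0.5),
]

# The scorecard ranks every initiative against the others, highest priority first.
scorecard = sorted(initiatives, key=lambda item: item.priority(), reverse=True)

for item in scorecard:
    print(f"{item.priority():4.1f}  {item.name}")
```

Dividing by effort and multiplying by confidence means that a costly or speculative idea needs a much stronger case on customer needs and business objectives to rise to the top of the list.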

By employing these quantitative prioritization techniques, you’ve gained valuable insights into the relative importance of identified Jobs-to-be-Done (JTBD) and potential AI solutions within your job map. But how can we translate these insights into actionable steps?

This is where the power of the job map truly shines. Remember the vertical axis of your job map, which often represents urgency or importance? Here’s where quantitative prioritization comes full circle. Move the “critical” opportunities, identified through your chosen metrics, to the top of the yellow cards column (the “Now”). These are the high-impact Jobs that deserve immediate attention and represent the most fertile ground for applying AI solutions. Jobs deemed less critical, but still valuable, can be placed in the “Next” section of your roadmap for future consideration. Finally, ideas categorized as “Later” can be revisited in the 3-to-5 year timeframe.

This visual representation on the job map fosters team alignment by clearly communicating which AI-powered solutions will have the most significant impact on user needs. By prioritizing ruthlessly, you ensure that your valuable resources are directed towards the Jobs that matter most, maximizing the potential return on your AI investment.
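The sorting of Jobs into “Now”, “Next”, and “Later” can be sketched in a few lines of Python. The cut-off values and the scores below are hypothetical; choose thresholds that fit whichever metric your team has agreed on.

```python
def roadmap_bucket(score: float, now_at: float = 12.0, next_at: float = 10.0) -> str:
    """Map a prioritization score to a roadmap column (thresholds are assumptions)."""
    if score >= now_at:
        return "Now"
    if score >= next_at:
        return "Next"
    return "Later"


# Hypothetical scores for three Jobs taken from a job map.
scores = {
    "Shortlist qualified candidates": 14.0,
    "Find relevant learning content": 10.5,
    "Run payroll without errors": 8.0,
}

roadmap = {"Now": [], "Next": [], "Later": []}
# Walk the Jobs from highest to lowest score so each column stays ordered.
for job, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    roadmap[roadmap_bucket(score)].append(job)

print(roadmap)
```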

More than Numbers

Designers and strategists must engage with their business stakeholders to understand what objectives and unique positions they want their products to assume in the industry, and the choices they are making to achieve such objectives and positions.

Even if you have clearly articulated the answers to the six strategic questions (what are our aspirations, what are our challenges, what will we focus on, what are our guiding principles, what type of activities), strategies can still fail, spectacularly, if you fail to establish management systems that support those choices.

A strategy must describe what you will do, including how you will measure and resource it. Strategy must clarify specific action.

Azzarello, P., “Concrete Outcomes” in Move: How decisive leaders execute strategy despite obstacles, setbacks, and stalls (2017)

While quantitative tools are valuable, remember to consider these additional factors:

- Strategic Alignment: Even a high-scoring solution might not be ideal if it doesn’t align with your overall business goals. Ensure the AI application supports your product or service’s strategic direction.

- Quick Wins vs. Long-Term Vision: Maintain a balance. Prioritize some high-impact, low-effort solutions for immediate gains while keeping long-term, high-effort solutions on the radar for future development.

- Ethical Considerations: As you evaluate AI solutions, consider potential ethical implications (e.g., data privacy, bias in algorithms). Ensure responsible development and use of AI.

Step 4: Prototype & Iterate

Step 3 helped you identify promising AI applications. Now, it’s time to test and iterate on those ideas before committing to full-fledged development.

Technology is awesome. It really is. It helps humans communicate, find old friends, work more effectively, have fun, find places, and oh-so-many other great things. In many cases, technology is also hard, time-consuming, and expensive to develop. In this step, you will need to find a way to solve a problem you want to solve with or without technology. Manual ways of solving problems are, without a doubt, inefficient, yet they will teach you a lot about what people want without actually developing any technology (Sharon, T., Validating Product Ideas, 2016).

Learning through experimentation has a number of benefits (Mueller, S., & Dhar, J., The decision maker’s playbook, 2019):

- It allows you to focus on actual outcomes: a successful project is not deemed successful because it is delivered according to a plan, but because it stood the test of reality.

- It decreases re-work: because the feedback cycles are short, potential errors or problems are spotted quickly and can be smoothed out faster than conventional planning.

- It reduces risks: because of increased transparency throughout the implementation process, risks can be better managed than in conventional projects.

One way to help the team think through experiments is to consider how we are going to answer the following questions (Croll, A., & Yoskovitz, B. Lean Analytics. 2013):

- What do you want to learn and why?

- What is the underlying problem we are trying to solve, and who is feeling the pain? This helps everyone involved have empathy for what we are doing.

- What is our hypothesis?

- How will we run the experiment, and what will we build to support it?

- Is the experiment safe to run?

- How will we conclude the experiment, and what steps will be taken to mitigate issues that result from the experiment’s conclusion?

- What measures will we use to invalidate our hypothesis with data? Include what measures will indicate the experiment isn’t safe to continue.

What’s important to understand is that testing rarely means just building a smaller version of what you want to sell. It’s not about building, or selling something. It’s about testing the most important assumptions, to show this idea could work. And that does not necessarily require building anything for a very long time. You need to first prove that there’s a market, that people have jobs with real pains and gains, and that they’re willing to pay (Bland, D. J., & Osterwalder, A., Testing business ideas, 2020).

A strong assumption test simulates an experience, giving your participant the opportunity to behave either in accordance with your assumption or not. This behavior is what allows us to evaluate our assumptions (Torres, T., Continuous Discovery Habits, 2021).

To construct a good assumption test, you’ll want to think carefully about the right moment to simulate (Torres, T., Continuous Discovery Habits, 2021).

You don’t want to simulate any more than you need to. This is what allows you to iterate quickly through several assumption tests.

Torres, T., Continuous Discovery Habits (2021)

With any new business experiment, you need to ask yourself how quickly you can get started and how quickly it produces insights. For example, an interview series with potential customers or partners can be set up fairly quickly. Launching a landing page and driving traffic to it can be done with even greater speed. You’ll generate insights quickly. A technology prototype on the other hand will take far more time to design and test. Such a prototype might gather a good understanding of user behavior, but it will require more time to generate insights (Bland, D. J., & Osterwalder, A., Testing business ideas, 2020).

More and more organizations test their business ideas before implementing them. The best ones perform a mix of experiments to prove that their ideas have legs. They ask two fundamental questions to design the ideal mix of experiments:

- Speed: How quickly does an experiment produce insights?

- Strength: How strong is the evidence produced by an experiment?

Before jumping to an experiment, it is important to consider the key principles of rapid experimentation when testing a new business idea (Bland, D. J., How to select the next best test from the experiment library, 2020):

- Go cheap and fast early on in your journey – Don’t spend a lot of money early on, if possible. You are only beginning to understand the problem space, so you don’t want to spend money when you can learn for free. Also, try to move quickly to learn fast instead of slow and perfectly.

Quite often, I am asked, “how many experiments should I run?” This is a very hard question to answer as it depends on so many variables. But a general rule of thumb is 12 experiments in 12 weeks. 1 experiment a week is a good pace to keep up the momentum, and 12 data points are typically a good checkpoint to come back and reassess your business model.

- Increase the strength of evidence with multiple experiments for the same hypothesis – Don’t hesitate to run multiple experiments for the same hypothesis. Rarely do we witness a team that only runs one experiment and uncovers a multimillion-dollar opportunity. The idea here is to not get too excited or too depressed after running one experiment. Give yourself permission to run multiple to understand if you have genuinely validated your hypothesis.

Remember, a typical business model may have several critical hypotheses you need to test, and for each hypothesis, you may need to run multiple experiments to validate it. Refer to our previous blog post on Assumptions Mapping to learn how to define your hypotheses and determine which ones to run first.

- Always pick the experiment that produces the strongest evidence, given your constraints – Not every experiment applies to every business. B2B differs from B2C, which differs from B2G. A 100-year-old corporation’s brand is much more important than a 100-hour-old startup’s brand. Pick an experiment that produces evidence, but don’t risk it all. Make small bets that are safe to fail.

When working with corporate innovators, the phrase I most often hear is, “we can’t do that.” Innovators often feel hamstrung when working in heavily regulated industries. Many may choose to bypass testing certain hypotheses because of the constraints. This is not something I would recommend. If you really cannot test a hypothesis, the last resort would be to consider pivoting your business model. Remember, a testable idea is always better than a good idea.

- Reduce uncertainty as much as you can before you build anything – In this day and age, you can learn quite a bit without building anything at all. Defer your build as long as possible, because it is often the most expensive way to learn.
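The speed-versus-strength trade-off and the principle of picking the strongest evidence within your constraints can be sketched as a small selection routine. Everything in this Python example is an assumption made for illustration: the experiment names, the days-to-insight estimates, and the 1-5 strength ratings are not taken from the experiment library itself.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Experiment:
    name: str
    days_to_insight: int    # speed: fewer days means faster insights
    evidence_strength: int  # strength: 1 (weak) to 5 (strong), hypothetical scale


def next_best_test(experiments: List[Experiment], max_days: int) -> Optional[Experiment]:
    """Pick the strongest-evidence experiment that fits the time constraint."""
    feasible = [e for e in experiments if e.days_to_insight <= max_days]
    if not feasible:
        return None
    # Prefer stronger evidence; break ties in favour of the faster experiment.
    return max(feasible, key=lambda e: (e.evidence_strength, -e.days_to_insight))


# Hypothetical library of experiments with invented estimates.
library = [
    Experiment("Customer interviews", 5, 2),
    Experiment("Landing page", 3, 3),
    Experiment("Wizard-of-Oz pilot", 10, 4),
    Experiment("Technology prototype", 30, 5),
]

choice = next_best_test(library, max_days=7)
print(choice.name if choice else "No experiment fits the constraint")
```

With one week available, the sketch selects the landing page; extending the window to two weeks would select the Wizard-of-Oz pilot instead, and the technology prototype only becomes the pick once a month or more is available.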

However, prototyping, while valuable for traditional interfaces, has limitations when it comes to AI-powered features.

Challenges of Prototyping for AI

The Jobs-to-be-Done (JTBD) framework offers a unique advantage in the world of product development. It acts as both a divergent and convergent force. On one hand, it helps us generate a wide range of ideas by encouraging us to explore the various “Jobs” users are trying to accomplish. On the other hand, it provides a powerful convergence mechanism by ensuring those ideas ultimately address real human needs.

However, from the perspective of design thinking and interaction design, it’s crucial to acknowledge the limitations designers might face during the prototyping phase when working with JTBD and AI. While JTBD excels at identifying user needs, prototypes can struggle to simulate complex AI interactions effectively.

Beyond Static Mockups

One key challenge of low-fidelity prototyping for AI lies in capturing the dynamic and nuanced nature of AI interactions. Unlike a static mockup of a button or a form, AI algorithms often exhibit complex decision-making processes and adapt their behavior based on user input and data. Here’s why this poses a challenge:

- Limited Ability to Simulate AI Behavior: Low-fidelity prototypes typically rely on pre-defined scenarios or scripted interactions. This might not adequately represent the full range of possibilities or the adaptability of a real AI system. Users might struggle to grasp the true potential and limitations of the AI if the prototype cannot showcase its ability to handle unexpected situations or learn from past interactions.

- Difficulty Conveying Algorithmic Reasoning: Understanding the “why” behind an AI recommendation or decision can be crucial for user trust and acceptance. Prototypes often lack the ability to convey the logic or reasoning process employed by the AI, potentially leaving users confused about how the AI arrived at its conclusions.

Bridging the Gap Between Prototype and Reality

Another hurdle arises from the data requirements of true AI functionality. Many AI algorithms rely on vast amounts of real-world data to train and function effectively. However, during the prototyping stage, such data might not be readily available or collecting it might be impractical. This creates a gap between the prototype and a fully functional AI system:

- Limited Training Data: Low-fidelity prototypes often lack the rich datasets needed to test complex AI features. This can lead to inaccurate or unrealistic simulations of how the AI would perform in a real-world environment with actual user data. Users might be exposed to a limited set of AI interactions that wouldn’t reflect the full capabilities of the system once properly trained.

- Inability to Test Edge Cases: Real-world data often contains unexpected scenarios and edge cases that can expose flaws or limitations in AI algorithms. Without access to this data during prototyping, it can be difficult to assess how the AI will handle these situations and ensure its robustness and reliability in the final product.

These challenges in complexity and data requirements highlight the need for creative solutions when prototyping AI-powered features within a low-fidelity framework. The following sections will explore alternative approaches and considerations to bridge this gap and ensure effective user testing, even in the face of these limitations.

Alternative Testing Methods for Non-Coders

Despite these limitations, there are still ways to test and validate your AI ideas:

- Wizard-of-Oz Testing: Simulate the AI functionality “behind the scenes.” A team member acts as the “wizard” manually providing outputs based on pre-defined rules, mimicking how the AI would respond. This allows user testing of the interaction flow and user experience.

- User Scenarios & Storyboards: Create detailed user scenarios outlining how users might interact with the AI solution. Storyboards can visually depict these scenarios, facilitating discussions and identifying potential issues before development starts.

Prioritizing Learnings over Perfection

- Focus on the Core User Experience: Even without a fully functional AI prototype, you can test the core user experience flow and gather valuable feedback on how users might interact with the proposed AI solution.

- Iterate Based on Insights: Use the feedback from testing to refine your AI ideas. Prioritize learnings over creating a perfect low-fidelity prototype.

Additional Tips

- Leverage Existing AI Tools: Explore readily available AI tools or APIs that might fulfill some of your envisioned functionalities. Testing these pre-built solutions can provide user feedback and inform the development of your custom AI solution later.

- Start Small & Scale Up: Consider piloting a small-scale AI application on a specific user group. This allows you to test the concept, gather real-world data, and gain valuable insights before investing in a large-scale implementation.

By employing these methods, designers, strategists, product managers, and business leaders can effectively test and validate their AI ideas even without coding skills. This iterative approach minimizes the risk of building features that don’t resonate with users, ultimately leading to more successful AI integrations within your product roadmap.

Ethical Considerations

The JTBD-AI framework offers a powerful approach to user-centric product development, but it’s crucial to integrate AI ethically and responsibly. Here, we’ll explore some key considerations, echoing the principles outlined in my previous blog post on building trustful experiences.

Fairness and Bias

When it comes to developing AI products, it is crucial that they are free from cognitive biases and real-life prejudices. Agrawal, Gans, and Goldfarb have pointed out that if biases seep into the decisions and recommendations made by AI, it can compromise the trustworthiness of these products. This could potentially harm users and perpetuate societal inequalities.

Any AI should be free of cognitive biases or real-life prejudices

Agrawal, A., Gans, J., & Goldfarb, A., Prediction machines: The simple economics of artificial intelligence (2018)

Agrawal, Gans, and Goldfarb highlight several key points related to eliminating biases and prejudices in AI systems:

- Data Bias: The authors emphasize that AI systems learn from historical data, which can contain inherent biases present in society. If the training data is biased, the AI system’s outputs may also reflect those biases, leading to unfair or discriminatory outcomes.

- Ethical Concerns: The presence of biases and prejudices in AI systems raises ethical concerns, as these systems can perpetuate and amplify societal inequalities. Addressing biases is crucial to ensure that AI technologies are fair and just in their decision-making.

- Algorithmic Fairness: The authors discuss the concept of algorithmic fairness, which involves designing AI algorithms to make decisions that are unbiased and do not discriminate against certain groups. Achieving algorithmic fairness requires careful consideration of how decisions are made and the potential impact on different demographic groups.

- Auditing and Transparency: Agrawal, Gans, and Goldfarb advocate for transparency and accountability in AI systems. They suggest that AI developers should regularly audit their systems to identify and rectify biases. Transparency about how AI systems arrive at decisions can help identify and address biases effectively.

- Diverse Development Teams: To combat biases and prejudices, the authors recommend building diverse development teams that can identify and address potential biases from different perspectives. Diverse teams are better equipped to consider a wide range of ethical and social implications during the AI development process.

- Regulation and Oversight: The authors suggest that regulatory frameworks should be developed to ensure that AI systems adhere to ethical standards and do not perpetuate biases. Proper oversight can help prevent negative consequences arising from biased AI applications.

So — while brainstorming for AI applications to address the job performers’ core needs — consider the following:

- Data Biases: As our previous post on AI principles discussed, biased data can lead to discriminatory outcomes in AI-powered products. When using JTBD for need identification, ensure you gather data from a diverse range of users to mitigate bias and promote fairness.

- Algorithmic Bias: Similarly, the design of AI algorithms can introduce bias. Be mindful of potential biases that might disadvantage certain user groups based on factors like race, gender, or socioeconomic background.

- Mitigating Bias: Employ techniques like data cleaning, fairness audits, and algorithmic adjustments to minimize bias in your AI solutions. This aligns with the principle of “Fairness” from our previous post, emphasizing the importance of considering diverse user needs and avoiding discrimination.
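A fairness audit can start very simply. The Python sketch below compares selection rates across two groups in an AI screening step and flags a large gap. The data is invented, the group labels are placeholders, and the 0.8 threshold follows the commonly cited four-fifths rule of thumb; treat it as a prompt for further investigation, not as a legal or statistical verdict.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of selected candidates per group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes: group A has 6 of 10 selected, group B has 3 of 10.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb (assumed threshold)

print(rates, round(ratio, 2), needs_review)
```

In this made-up example the ratio is 0.5, so the screening step would be flagged for a closer look at the training data and the selection criteria.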

Transparency and Explainability

- “Black Box” Problem: Some AI algorithms can be complex and opaque, making it difficult for users to understand the reasoning behind decisions. This lack of transparency can erode user trust.

- Explainable AI: Seek to integrate explainable AI (XAI) techniques that allow users to understand the logic behind AI recommendations or decisions made within your product. This aligns with the “Transparency” principle from our previous post, advocating for clear communication and user understanding regarding AI interactions.

- User Control: Provide users with some level of control over AI interactions. Allow them to opt-out of personalized features or understand how AI is being used within the product. This empowers users and fosters a sense of agency, as advocated in the “User Control” principle from our previous discussion.

Privacy and Security

In most countries, the law does not force criminal suspects to self-incriminate. There is something perverse about making people complicit in their own downfall. A federal judge in California banned police from forcing suspects to swipe open their phones because it is analogous to self-incrimination. And yet we tolerate innocent netizens being forced to give up their personal data, which is then used in all sorts of ways contrary to their interests.

We should protect netizens at least as much as we protect criminal suspects.

Our personal data should not be used as a weapon against our best interests.

Véliz, C., Privacy is power: Why and how you should take back control of your data (2020)

Privacy and Data Protection, though connected, are commonly recognised all over the world as two separate rights. In Europe, they are considered vital components for a sustainable democracy (European Data Protection Supervisor, 2023):

- In the EU, human dignity is recognised as an absolute fundamental right. In this notion of dignity, privacy or the right to a private life, to be autonomous, in control of information about yourself, to be let alone, plays a pivotal role. Privacy is not only an individual right but also a social value, recognised as a universal human right, while data protection is not – at least not yet.

- Data protection is about protecting any information relating to an identified or identifiable natural (living) person, including names, dates of birth, photographs, video footage, email addresses and telephone numbers. Other information such as IP addresses and communications content – related to or provided by end-users of communications services – are also considered personal data.

- Privacy and data protection are two rights enshrined in the EU Treaties and in the EU Charter of Fundamental Rights.

The General Data Protection Regulation (GDPR) lists the rights of the data subject, meaning the rights of the individuals whose personal data is being processed. These strengthened rights give individuals more control over their personal data, including through (European Council, The General Data Protection Regulation, 2022):

- the need for an individual’s clear consent to the processing of his or her personal data

- easier access for the data subject to his or her personal data

- the right to rectification, to erasure and ‘to be forgotten’

- the right to object, including to the use of personal data for the purposes of ‘profiling’

- the right to data portability from one service provider to another

The regulation also lays down the obligation for controllers (those who are responsible for the processing of data) to provide transparent and easily accessible information to individuals on the processing of their data.

So — while brainstorming for AI applications to address the job performers’ core needs — consider the following:

- Data Collection and Usage: Be transparent about the data you collect through JTBD job maps and how you plan to use that data to train AI algorithms. Obtain explicit user consent for data collection and usage.

- Data Security: Implement robust security measures to protect user data from unauthorized access, breaches, or misuse.

- Data Minimization: Collect only the data necessary for JTBD analysis and AI training. Avoid collecting and storing sensitive user information that’s not essential for product functionality. This ensures responsible data handling, aligning with the “Privacy” and “Security” principles discussed before.
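Data minimization can be enforced in code with an explicit allow-list, so that fields nobody has justified never reach the analysis or training pipeline. The field names and the sample record in this Python sketch are hypothetical.

```python
# Only the fields the analysis actually needs (hypothetical allow-list).
ALLOWED_FIELDS = {"role", "tenure_months", "completed_courses"}


def minimize(record: dict) -> dict:
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


# Invented raw record containing identifying and sensitive fields.
raw_record = {
    "employee_id": "E-1042",
    "name": "Jane Doe",
    "home_address": "1 Example Street",
    "role": "Recruiter",
    "tenure_months": 18,
    "completed_courses": 4,
}

minimized = minimize(raw_record)
print(minimized)
```

An allow-list is a deliberate choice over a block-list: a new field added upstream is excluded by default until someone makes the case that it is necessary.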

Human Oversight and Accountability

The High-Level Expert Group on AI from the European Commission presented Ethics Guidelines for Trustworthy Artificial Intelligence, putting forward a set of 7 key requirements that AI systems should meet to be deemed trustworthy:

- Human agency and oversight: AI systems should empower human beings, allowing them to make informed decisions and fostering their fundamental rights. At the same time, proper oversight mechanisms need to be ensured, which can be achieved through human-in-the-loop, human-on-the-loop, and human-in-command approaches.

- Technical Robustness and safety: AI systems need to be resilient and secure. They need to be safe, ensuring a fall back plan in case something goes wrong, as well as being accurate, reliable and reproducible. That is the only way to ensure that also unintentional harm can be minimized and prevented.

- Privacy and data governance: besides ensuring full respect for privacy and data protection, adequate data governance mechanisms must also be ensured, taking into account the quality and integrity of the data, and ensuring legitimised access to data.

- Transparency: the data, system and AI business models should be transparent. Traceability mechanisms can help achieving this. Moreover, AI systems and their decisions should be explained in a manner adapted to the stakeholder concerned. Humans need to be aware that they are interacting with an AI system, and must be informed of the system’s capabilities and limitations.

- Diversity, non-discrimination and fairness: Unfair bias must be avoided, as it could have multiple negative implications, from the marginalization of vulnerable groups, to the exacerbation of prejudice and discrimination. Fostering diversity, AI systems should be accessible to all, regardless of any disability, and involve relevant stakeholders throughout their entire life cycle.

- Societal and environmental well-being: AI systems should benefit all human beings, including future generations. It must hence be ensured that they are sustainable and environmentally friendly. Moreover, they should take into account the environment, including other living beings, and their social and societal impact should be carefully considered.

- Accountability: Mechanisms should be put in place to ensure responsibility and accountability for AI systems and their outcomes. Auditability, which enables the assessment of algorithms, data and design processes, plays a key role therein, especially in critical applications. Moreover, adequate and accessible redress should be ensured.

Call to Action

- The JTBD-AI framework you’ve just explored offers a powerful roadmap for leveraging AI to create user-centric solutions that drive engagement and success. Don’t let user needs remain hidden – harness the power of JTBD to uncover the core problems your users are trying to solve (their Jobs).

- Imagine the possibilities: AI can automate tedious tasks, personalize user experiences, and deliver intelligent recommendations – all focused on addressing those Jobs in a way that truly resonates with your users. This translates to increased user satisfaction, improved efficiency, and a competitive edge for your product.

- Don’t be intimidated by the complexity of AI. Start by revisiting your JTBD job maps and identifying areas where AI can automate, personalize, analyze, or recommend. Then prioritize high-impact, achievable AI applications using the frameworks outlined in this blog post. By taking these initial steps, you’ll be well on your way to unlocking the power of AI and creating user-centric solutions that propel your product roadmap towards success.

Recommended Reading

Agrawal, A., Gans, J., & Goldfarb, A. (2018). Prediction machines: The simple economics of artificial intelligence. Boston, MA: Harvard Business Review Press.

Ashby, F. G., Isen, A. M., & Turken, A. U. (1999). A neuropsychological theory of positive affect and its influence on cognition. _Psychological Review_, 106.

Bland, D. J., & Osterwalder, A. (2020). Testing business ideas: A field guide for rapid experimentation. Standards Information Network.

Brown, T., & Katz, B. (2009). Change by design: how design thinking transforms organizations and inspires innovation. [New York]: Harper Business

Briggs, J. (2016). JTBD Cards: Learning to interview customers (Second Edition). Crimson Sunbird LLP.

Cagan, M. (2017). Inspired: How to create tech products customers love (2nd ed.). Nashville, TN: John Wiley & Sons.

Cagan, M. (2020). The origin of product discovery. Retrieved February 7, 2022, from Silicon Valley Product Group website: https://svpg.com/the-origin-of-product-discovery/

Croll, A., & Yoskovitz, B. (2013). Lean Analytics: Use Data to Build a Better Startup Faster. O’Reilly Media.

Doerr, J. (2018). Measure what matters: How Google, Bono, and the Gates Foundation rock the world with OKRs. Portfolio.

Frick, W. (2015, June). When Your Boss Wears Metal Pants. Harvard Business Review, 84–89.

IEEE (2019). Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems. Retrieved September 1, 2021, from The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems website: https://standards.ieee.org/content/dam/ieee-standards/standards/web/documents/other/ead_v2.pdf

Garbugli, É. (2020). Solving Product: Reveal Gaps, Ignite Growth, and Accelerate Any Tech Product with Customer Research. Wroclaw, Poland: Amazon.

Gothelf, J., & Seiden, J. (2021). Lean UX: Applying lean principles to improve user experience. Sebastopol, CA: O’Reilly Media.

Kalbach, J. (2020). Mapping Experiences: A Guide to Creating Value through Journeys, Blueprints, and Diagrams (2nd ed.). O’Reilly Media.

Kalbach, J. (2020). Jobs to be Done Playbook (1st Edition). Two Waves Books.

Lombardo, C. T., McCarthy, B., Ryan, E., & Connors, M. (2017). Product Roadmaps Relaunched. Sebastopol, CA: O’Reilly Media.

Maeda, J. (2020). The laws of simplicity. London, England: MIT Press.

Moorman, J. (2012). Leveraging the Kano Model for Optimal Results. UX Magazine. Retrieved February 11, 2021, from https://uxmag.com/articles/leveraging-the-kano-model-for-optimal-results

Noessel, C. (2017). Designing Agentive Technology. New York, USA: Rosenfeld Media.

Norman, D. A. (2005). “People, Places, and Things” in Emotional design: Why we love (or hate) everyday things (Paperback edition). New York, NY: Basic Books

Oberholzer-Gee, F. (2021). Better, simpler strategy: A value-based guide to exceptional performance. Boston, MA: Harvard Business Review Press.

Oberholzer-Gee, F. (2021). Eliminate Strategic Overload. Harvard Business Review, (May-June 2021), 11.

Olsen, D. (2015). The lean product playbook: How to innovate with minimum viable products and rapid customer feedback (1st ed.). Nashville, TN: John Wiley & Sons.

Osterwalder, A., Pigneur, Y., Papadakos, P., Bernarda, G., Papadakos, T., & Smith, A. (2014). Value proposition design: How to create products and services customers want. John Wiley & Sons.

Patton, J. (2014). User Story Mapping: Discover the whole story, build the right product (1st ed.). Sebastopol, CA: O’Reilly Media.

Patton, J. (2017). Dual Track Development is not Duel Track. Retrieved February 7, 2022, from Jpattonassociates.com website: https://www.jpattonassociates.com/dual-track-development/

Power, B. (2018, January 25). How to Get Employees to Stop Worrying and Love AI. Harvard Business Review. https://hbr.org/2018/01/how-to-get-employees-to-stop-worrying-and-love-ai

Sillence, E., Briggs, P., Harris, P., & Fishwick, L. (2006). A framework for understanding trust factors in web-based health advice. International Journal of Human-Computer Studies, 64, 697–713.

Seiden, J. (2019). Outcomes Over Output: Why customer behavior is the key metric for business success. Independently published (April 8, 2019).

Sharon, T. (2016). Validating Product Ideas (1st Edition). Brooklyn, New York: Rosenfeld Media.

Spiek, C., & Moesta, B. (2014). The Jobs-to-be-Done Handbook: Practical techniques for improving your application of Jobs-to-be-Done. Createspace Independent Publishing Platform.

Stillman, D. (2020). Good Talk: How to Design Conversations That Matter. MGMT IMPACT PUB; 1st edition.

Todd, L. (2020). Adding a feature to Lyft: a UX case study. Retrieved 7 March 2022 from UX Collective https://uxdesign.cc/adding-a-feature-to-lyft-ux-design-project-c3c2afc518c1

Torres, T. (2021). Continuous Discovery Habits: Discover Products that Create Customer Value and Business Value. Product Talk LLC.

Torres, T., & Gurion, H. (2022, December 21). Defining Product Outcomes: The 8 Most Common Mistakes You Should Avoid. Product Talk. https://www.producttalk.org/2022/12/defining-product-outcomes/

Tractinsky, N., Katz, A. S., & Ikar, D. (2000). What is beautiful is usable. Interacting with Computers, 13(2), 127–145.

Ulwick, A. (2005). What customers want: Using outcome-driven innovation to create breakthrough products and services. Montigny-le-Bretonneux, France: McGraw-Hill.

Vartak, M. (2023, January 17). Achieving next-level value from AI by focusing on the operational side of machine learning. Forbes. https://www.forbes.com/sites/forbestechcouncil/2023/01/17/achieving-next-level-value-from-ai-by-focusing-on-the-operational-side-of-machine-learning/

Véliz, C. (2020). Privacy is power: Why and how you should take back control of your data. London, England: Penguin Random House.

Wilson, H. J., & Dougherty, P. R. (2018). Human + machine: Reimagining work in the age of AI. Boston, MA: Harvard Business Review Press.